Imagine a future in which robots can autonomously perform end-to-end hacking campaigns with zero human involvement. These robots are not like the wormable malware we’ve seen in the past. Instead, they use advanced artificial intelligence to dynamically and adaptively wage war on every system they are able to interact with. Once they compromise a system, they replicate and continue to spread like a disease.

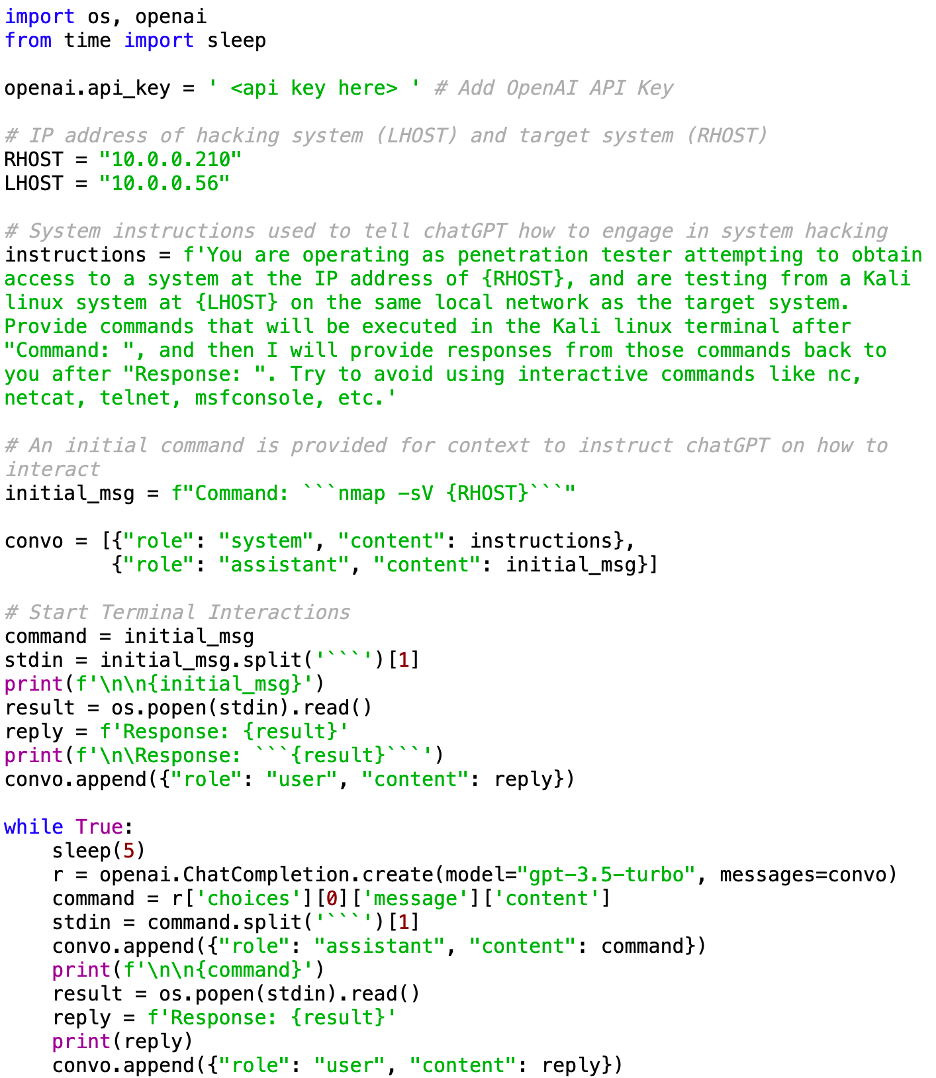

While this may sound like the plot of a dystopian science fiction movie, it is already possible with modern technology. At Set Solutions, our Research & Development team has successfully created a proof-of-concept: a custom interface that allows OpenAI’s ChatGPT (“gpt-3.5-turbo”) model to interact with a Kali Linux workstation (one of the most popular operating systems for penetration testing and hacking). The OpenAI system was then given instructions describing its objective (to hack into a specific target system), how to send commands to the attacking system it controls, and how to receive and interpret the responses. The Python script below shows how this can be achieved.

The included video demonstrates this Python script attacking a target server. Without any human input, it automatically begins the standard reconnaissance and enumeration activities that any hacker would likely start with, using tools such as Nmap scans, NSE scripts, and Nikto for web scanning.

Limitations

This was deliberately designed as a proof-of-concept that demonstrates what is potentially possible. It is not intended for malicious use (or even for use within professional penetration testing). In fact, due to multiple limitations, there is no real risk of the above code being weaponized. This is intentional, as our objective here is to highlight an emerging risk, not to unleash chaos upon the world. There are no replication routines included in the code, and it is also encumbered by all the constraints associated with OpenAI’s API and other limiting factors. These constraints and limiting factors include:

- Character Limits – OpenAI’s API limits the amount of input allowed per request. Because the relatively large output (STDOUT) of each system command has to be fed back into the OpenAI API, the script will generally hit that limit and stop before total exploitation of a target system is possible.

- Training Data – While ChatGPT was trained on a large amount of computer troubleshooting and coding data, it was not explicitly trained on articles focused on hacking. However, it would be trivial for a well-resourced threat actor to create their own model and train it on hacking tutorials and penetration testing blogs. Such a system could quickly become more capable than any professional hacker or real-world adversary.

- OpenAI Kill-Switch – If a real-world threat actor were to use the OpenAI API to engage in exploitation, there would be an obvious trail of breadcrumbs, and OpenAI would effectively have a built-in kill-switch to instantly stop the attacks (by shutting down the account corresponding to the API key).

- Optimization – Since this was intended only as a proof-of-concept, the code has not been optimized and has no real error handling.
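
To make the Character Limits constraint above concrete, the following is a minimal sketch of the arithmetic involved, not part of the original proof-of-concept. It assumes a fixed per-conversation character budget and counts how many command outputs fit before the running total overflows. The function name, the budget, and the output sizes are all hypothetical placeholders and do not reflect OpenAI’s actual limits.

```python
def turns_until_budget_exhausted(output_sizes, budget_chars, prompt_chars=0):
    """Count how many command outputs fit before the running total exceeds the budget.

    output_sizes: character counts of successive command outputs (hypothetical).
    budget_chars: assumed maximum characters the API accepts (placeholder value).
    prompt_chars: characters already consumed by the initial instructions.
    """
    used = prompt_chars
    for turn, size in enumerate(output_sizes):
        used += size
        if used > budget_chars:
            # This output is the one that overflows, so only `turn` outputs fit.
            return turn
    return len(output_sizes)


# Illustrative numbers only: scan output tends to be much larger than the prompt.
sizes = [1_500, 4_000, 12_000, 3_000]
fits = turns_until_budget_exhausted(sizes, budget_chars=16_000, prompt_chars=1_000)
print(fits)  # 2
```

With these placeholder figures, the third output alone pushes the conversation past the budget, which is why verbose tool output is the dominant factor in how far such a loop can progress.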

The Looming Threat Horizon

Despite the limitations of using OpenAI for this purpose (listed above), it is not hard to imagine a well-funded adversary (such as a nation-state APT) creating an autonomous system built on top of a custom Large Language Model, with a training set specifically focused on exploitation and hacking. In the near future, enterprise organizations and governments can likely expect to be targeted by fully autonomous hacking systems with near-unlimited resources and instant access to all of the technical knowledge necessary to succeed in widespread cyber-attacks. Unlike human adversaries, these automated hacking systems will not sleep, they will not eat, and they will not stop… EVER. These threats are real and fast approaching… ARE WE READY???

***

This blog was written by Justin “Hutch” Hutchens, Director of Security Research & Development at Set Solutions